Do you want to take a leap of faith, or become an old man, filled with regret, waiting to die alone?

I wanted to write down some thoughts about a couple of threads that I've been pulling on recently.

At some level, the best people I have known weigh their personal utility function against their own mortality. Humans have a lot of different ways of coping with death. The Stoics advocated death meditation as a core tenet of leading a virtuous life. Christians have the concept of memento mori, from which the title of this blog post is derived. A related religious practice is Maraṇasati, one of the Buddhist Five Remembrances, which has grown to encompass a variety of practical exercises for making the reality of your own mortality clear to yourself.

I've had the pleasure of working with and getting to know some amazingly intelligent, driven people, and have watched a lot of different life paths unfold. Great engineers see ambiguity and tradeoffs in most things, they care about getting to the essence of problems, and they are frustrated when people don't think about the world in the same way. One of my favorite YouTube videos about this is the red lines skit - the humor comes from the difficulty that The Expert has in trying to get the other people in the room to think deeply about what they are talking about. It's like talking to people who are inside Plato's Cave. It's not that doing engineering necessarily makes you into this type of person - there are plenty of engineers who are dogmatic, egotistical, and lack basic emotional regulation - it's just been my observation that those types of people don't seem to make it to the highest levels. At some point, maturing into a really great engineer requires intellectual honesty, which in turn requires self-mastery, which can be really difficult.

These two threads have come together for me recently as I've been spending more time reflecting on my internal framework for living a virtuous life. I feel a tremendous amount of pressure to get the next few years right. I believe we are on the brink of a hard takeoff in AI capabilities, and the next few years will be the highest-leverage time in my own life, and possibly in the history of humanity. On top of that, remarkably few people are actually able to exert any leverage on these events. Even in Silicon Valley, the vast majority of engineers and investors are grappling with the feeling that the world is changing faster than they can keep up, living in a dark forest of uncertainty about what AI capabilities might be unleashed with the next OpenAI, Anthropic, or Gemini announcement. One symptom of this is the influx of funding and talent into robotics, despite no particularly compelling revenue story - for many people, it feels like one of the last places that might be safe when the next LLM release automates everything else.

My own feelings on this are complex. On the one hand, I feel incredibly lucky to have developed an interest in deep learning at one of the best possible times to do so. I will likely make more money this year than either of my parents made in their entire lives - there are a huge number of other life trajectories I could have gone down that would have left me with a lot less agency than I have today.

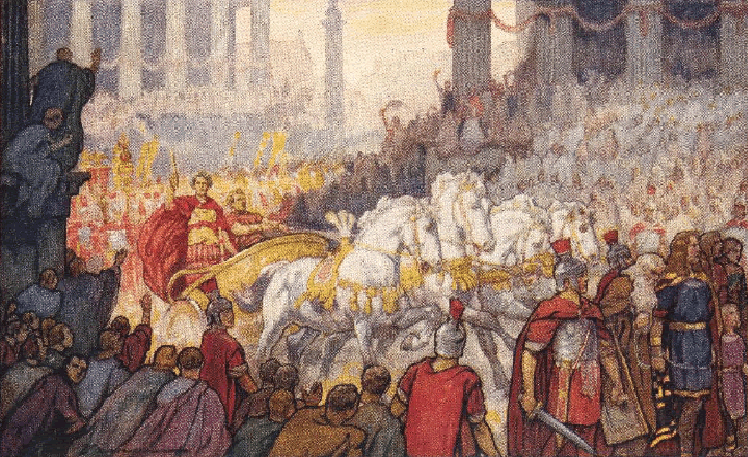

On the other hand, I have a tremendous feeling of failure, unease, and the kind of restlessness that accompanies a visceral awareness of one's own mortality - hominem te esse memento, remember that you are but a man. I planned to work on K-Scale for the rest of my life - it was the thing I wanted to compound, because it feels like the end game, and I want to play a large part in shaping it. It's quite obvious to me even today that all of AI will converge towards humanoids; it's just a matter of time horizon. This was not actually the first idea I had - in fact, I initially didn't think I should work on robotics, but after I dipped my toes into the startup world and felt that funding would not be an insurmountable barrier,¹ I shifted my focus to optimize for ambitious, long-horizon ideas that could potentially become trillion-dollar companies, and settled on K-Scale.

The problem was that everything else I could imagine working on, besides helping to build AGI at a frontier lab, really seemed like a short-term project that was not going to compound into something great long-term. There are lots of picks-and-shovels companies to be built, of course, and I probably have some insight into what kinds of picks-and-shovels the AI race might need,² but I also think that most of these companies have a short shelf life. So failing to raise a Series A, when K-Scale was the only thing I could picture building that could compound into a long-term project, felt like being stuck at the bottom of a dark lake trying to swim for the surface - as if the chance to do something uniquely my own, instead of just working for someone else, was snatched away before I could even get it off the ground.

There's some Chinese slang that I recently learned, 白月光. It literally means "White Moonlight", and, as I understand it, refers to the nostalgic, melancholy, idealized feeling of remembering a woman you loved but never had. I don't know if that is how I'll end up feeling about K-Scale, once the pain subsides. Right now I just feel a very strong desire to prove that I am capable of accelerating humanity's progress towards a post-scarcity future, at a moment when it is uniquely possible to do so.

And in case it is not clear - I have a huge amount of gratitude towards everyone who believed in K-Scale and contributed towards trying to make it a success, and despite how it turned out, I do believe that we built something beautiful. At its peak, K-Scale embodied everything I loved about working at Tesla - the messiness, the naivete, the unbridled ambition, the two-week sprints and impossible deadlines, and the overpowering feeling of being alive.

Footnotes

1. In fact, it still is not clear to me why I had so much trouble raising, and this is one of the biggest barriers to me deciding to work on another startup.

2. Did you know that, at K-Scale, I was the first customer for David AI, and the one who suggested that they should look into two-player speech data instead of robotics data? I really should have angel invested.